With my initial focus on the Rust API, I made sure to enable GitHub CI so that the full test suite runs on every push. Other good practices include:

- Use of ENV vars (this is pretty basic)

- Use of dotenv, for both dev and production, of course.

- Taking a Docker-first approach (more on this later...)
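To make the ENV-var point concrete, here is a minimal sketch of reading settings at startup using only the Rust standard library. The variable names `DATABASE_URL` and `PORT`, the `Settings` struct, and the default port of 8080 are all assumptions for illustration, not what the actual project uses. A dotenv loader would simply populate the process environment from a `.env` file before this code runs.

```rust
use std::env;

/// Settings the API needs at startup. Field names are hypothetical.
#[derive(Debug, PartialEq)]
pub struct Settings {
    pub database_url: String,
    pub port: u16,
}

/// Build `Settings` from any key -> value lookup, so the same code can be
/// driven by the real environment or by a stub in tests.
pub fn settings_from<F>(lookup: F) -> Result<Settings, String>
where
    F: Fn(&str) -> Option<String>,
{
    // Required value: fail loudly instead of starting half-configured.
    let database_url = lookup("DATABASE_URL")
        .ok_or_else(|| "DATABASE_URL is not set".to_string())?;

    // Optional value: fall back to an assumed default when absent.
    let port = match lookup("PORT") {
        Some(raw) => raw
            .parse::<u16>()
            .map_err(|e| format!("PORT is not a valid port number: {}", e))?,
        None => 8080,
    };

    Ok(Settings { database_url, port })
}

/// Read from the real process environment.
pub fn settings_from_env() -> Result<Settings, String> {
    settings_from(|key| env::var(key).ok())
}
```

Passing the lookup as a closure keeps the parsing logic testable without mutating the process environment, which matters when tests run in parallel.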

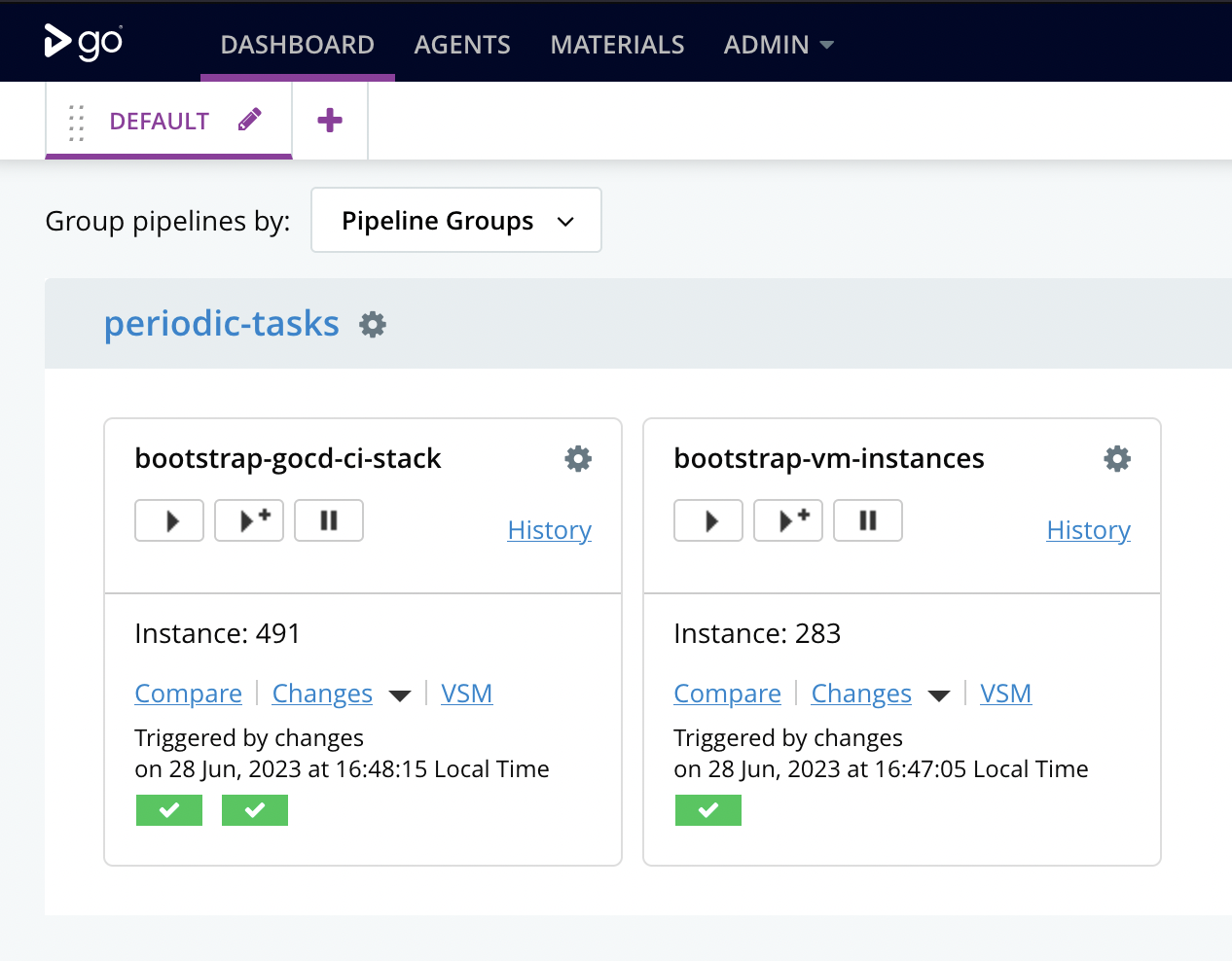

Of course, an ideal DevOps solution would introduce a build pipeline. There are many options in this space, from Jenkins to GoCD, although a local GitLab instance can do all of this, including hosting a container registry. Instead, I decided to take a "scrappier" approach (for now) using just Docker. For those wondering, my local GoCD instance hasn't been involved in this project (yet).

The Homelab Stack

My homelab production stack consists of XCP-ng (XenServer) running on a Dell R740-xd compute slice, which hosts a Debian instance.

For anything commercial or critical, one should use a tool like Packer to ensure binaries are compiled and curated rather than pulled in from external sources. This helps mitigate supply-chain attacks. For my purposes, this is something I haven't put much effort into (yet), and I have to chalk it up as one of the compromises made.

Since the inception of the homelab, I have been extending a mature collection of Ansible playbooks and adapting them for my purposes.

Automated Enforced Linux Configuration with Ansible (from Packages to Security)

The most basic form is what I call "metal": the bare-bones basics you would want on any new OS install. This covers pretty much everything from SSH users to UFW rules, basically all the things you'd do during the first five minutes after installing any LTS Linux.

GoCD runs the Ansible playbooks as scheduled (timed) jobs, thereby ensuring that all hosts running any services have the same basic "metal" configs in place.

Of course, the bare-bones VM playbook takes things further. For example, one can specify host-specific ports that need to be allowed in UFW (in addition to commonly open ports, such as SSH). It looks a bit like this:

    - hosts: vm-instances
      vars:
        # redacted...
      become: true
      gather_facts: false
      roles:
        - { role: users, tags: ["users"] }
        - { role: security, tags: ["security"] }
        - { role: hostname, tags: ["hostname"] }
        - { role: devtools, tags: ["devtools"] }
        - { role: timezone, tags: ["timezone"] }
        - { role: ntp, tags: ["ntp"] }
        - { role: python, tags: ["python"] }

Docker (with Docker Compose)

During my initial testing with the Rust API, the primary Dockerfile lived in its codebase. The frontend is assumed to be located in a directory at `../frontend`, relative to the Rust API's git repo. This side-by-side approach is quite common, and the main Dockerfile simply expects the frontend image to be built in place. Similarly, the Rust API is also built in place.

If/when we want to introduce a build pipeline, this step would be broken up into separate segments: (1) each image is built by its own build pipeline, which pushes the Docker image into a container registry, and (2) the deploy action simply pulls the latest images from the container registry and continues with an orchestrated deploy (as needed).

To keep things simple, I'm doing all of the above on the same host and skipping the network traffic to and from a container registry (ironically, in production AWS accounts this cost can become rather large; I've seen clients with bills in excess of $5,000 just for traffic).

Offloading TLS using HAProxy and pfSense

At the very ingress of my network sits pfSense, and over the years this has grown and evolved to take care of many functions, most recently TLS offloading for various VMs and internal services.

CORS in Axum

I'm using a redacted domain, `internal.io`, and the frontend will access sub-domains of it, including the Rust API, so CORS needs to be configured. This is a relatively simple task in Axum. The keen-eyed will also notice that I configure the middleware in a similar manner.

    use axum::Router;
    use tower_http::cors::{Any, CorsLayer};

    // Wrap the router in a CORS layer that only accepts the known frontends.
    fn allow_cors(router: Router) -> Router {
        let origins = [
            "http://localhost:9001".parse().unwrap(),
            "https://tidy.internal.io".parse().unwrap(),
        ];

        let cors = CorsLayer::new()
            .allow_origin(origins)
            .allow_headers(Any)
            .allow_methods(Any);

        router.layer(cors)
    }

    pub async fn serve(config: &AppConfig, addr: &str, handle: MyActorHandle) {
        // Build the router, then layer CORS and the remaining middleware on top.
        let mut app = api_router();
        app = allow_cors(app);
        app = add_middleware(config, app, handle);

        axum::Server::bind(&addr.parse().unwrap())
            .serve(app.into_make_service())
            .await
            .unwrap();
    }

Automated Backups to AWS S3

I created two separate scripts that run as cronjobs on the deployment host: (1) one exports the Postgres DB, compresses it into a tar.gz archive and pushes it to S3, and (2) the other repeats the same process for the cached directory of assets. Local tar.gz archives are wiped from disk after the upload, so they don't keep eating up precious disk space.

However, it's worth noting that if the assets backup didn't exist, one could use details within the DB and the S3 buckets to script a download, effectively re-creating the cached asset store (both uploaded images and QR code images). Thankfully, I won't need to address this bit of busywork (if I can do anything to save on time, that's a win!).
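The database half of the cron jobs described above could be sketched roughly as follows. This is not the actual script: the database name, bucket name, `/tmp` staging directory, and `db/` key prefix are all hypothetical, and it assumes `pg_dump`, `tar`, `aws`, and `rm` are on the `PATH` with credentials already configured. The plan is built as plain data first, so it can be inspected or tested before anything is executed.

```rust
use std::process::Command;

/// One external command: the program name followed by its arguments.
pub type Step = Vec<String>;

fn step(parts: &[&str]) -> Step {
    parts.iter().map(|p| p.to_string()).collect()
}

/// Plan the commands for one database backup run.
/// `stamp` is a caller-supplied timestamp used to make file names unique.
pub fn db_backup_steps(db: &str, bucket: &str, stamp: &str) -> Vec<Step> {
    let base = format!("{}-{}", db, stamp);
    let dump_name = format!("{}.sql", base);
    let dump = format!("/tmp/{}", dump_name);
    let archive = format!("/tmp/{}.tar.gz", base);
    let target = format!("s3://{}/db/{}.tar.gz", bucket, base);

    vec![
        // 1. Export the database to a plain SQL file.
        step(&["pg_dump", "--dbname", db, "--file", &dump]),
        // 2. Compress the dump into a tar.gz archive.
        step(&["tar", "-czf", &archive, "-C", "/tmp", &dump_name]),
        // 3. Upload the archive to S3.
        step(&["aws", "s3", "cp", &archive, &target]),
        // 4. Wipe the local files so they do not eat up disk space.
        step(&["rm", "-f", &dump, &archive]),
    ]
}

/// Execute the steps in order, stopping at the first failure so that local
/// files are only removed after a successful upload.
pub fn run(steps: &[Step]) -> Result<(), String> {
    for s in steps {
        let (program, args) = s.split_first().ok_or("empty step")?;
        let status = Command::new(program)
            .args(args)
            .status()
            .map_err(|e| format!("{}: {}", program, e))?;
        if !status.success() {
            return Err(format!("{} exited with {}", program, status));
        }
    }
    Ok(())
}
```

A cron entry would then call something like `run(&db_backup_steps("tidy", "my-backups", &timestamp))`; the assets backup would follow the same shape with the `pg_dump` step replaced by archiving the cached directory.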

Technical Debt?

We all have some, and the most glaring item I need to put more time and effort into is the Elasticsearch container setup. It needs a custom Dockerfile to install the "plugins" that allow backing up the ES index to AWS S3, which can then be configured via the Kibana UI.

Shipping logs into a Graylog server would be nice to have as well.